Which is Better? User Research with A/B Testing

When developing a new product, whether a physical object or a website, designers typically create multiple versions, keeping, changing, or dropping certain parts along the way. Throughout this process, A/B Testing is a valuable method for determining which features work as they are and which don’t.

What is A/B Testing?

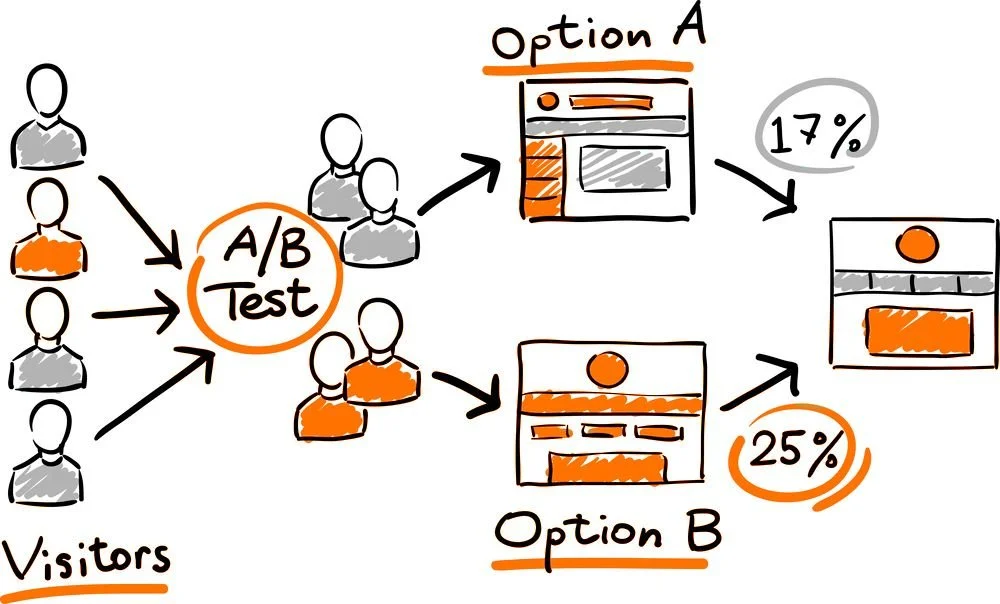

A/B Testing, also known as split testing, is a research method that compares versions of something (usually two) by splitting people into groups. One group of testers is shown Version A, while the other group is shown Version B. By observing how each group interacts with the product, researchers can determine which elements are most effective and combine them into a better version.
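The random split into two groups can be sketched in a few lines of Python. This is a minimal illustration, assuming participants are identified by simple string IDs; the function name `split_into_groups` is hypothetical and not taken from any particular testing tool.

```python
import random


def split_into_groups(participants, seed=None):
    """Randomly split participants into two groups of (nearly) equal size."""
    rng = random.Random(seed)      # optional seed makes the split reproducible
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # First half sees Version A; the second half (one larger if the count is odd) sees Version B
    return {"A": shuffled[:half], "B": shuffled[half:]}


groups = split_into_groups(["p1", "p2", "p3", "p4", "p5", "p6"], seed=42)
print(len(groups["A"]), len(groups["B"]))  # 3 3
```

Shuffling before halving keeps the assignment random, so a difference between the groups is more likely to reflect the two versions than who happened to end up in each group.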

How do you do A/B Testing?

To test a web page, researchers can use one of several online services, such as Crazy Egg, Optimizely, and Omniconvert. In essence, these tools send each visitor to one of the available variations of the site. This lets researchers see how a random selection of people interact with each version. They can track statistics such as click-through rates, bounce rates, and which areas of the page people interact with most.
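The idea of sending visitors to variations and tracking their behavior can be sketched in Python. This is a hypothetical, simplified model, not a description of how Crazy Egg, Optimizely, or Omniconvert work internally. It assumes each visitor has a stable ID, hashes that ID to pick a variant (so a returning visitor keeps seeing the same version), and assumes a made-up log format where each visit is recorded as `(variant, clicked, bounced)`. Here "click-through rate" is taken to mean the share of visits that clicked a tracked element and "bounce rate" the share that left without further interaction; both are simplified definitions.

```python
import hashlib
from collections import defaultdict

# Hypothetical list of page variations; a real test might use more than two.
VARIANTS = ["A", "B"]


def assign_variant(visitor_id, variants=VARIANTS):
    """Deterministically map a visitor ID to one variant.

    Hashing (instead of a fresh random draw on every visit) means a
    returning visitor is sent to the same version of the page.
    """
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def summarize(log):
    """Compute click-through and bounce rates for each variant.

    `log` is an iterable of (variant, clicked, bounced) tuples, one per
    visit (an assumed, simplified format).
    """
    totals = defaultdict(lambda: {"visits": 0, "clicks": 0, "bounces": 0})
    for variant, clicked, bounced in log:
        counts = totals[variant]
        counts["visits"] += 1
        counts["clicks"] += int(clicked)
        counts["bounces"] += int(bounced)
    return {
        variant: {
            "click_through_rate": c["clicks"] / c["visits"],
            "bounce_rate": c["bounces"] / c["visits"],
        }
        for variant, c in totals.items()
    }


# Illustrative (made-up) visits: (visitor_id, clicked, bounced)
visits = [("u1", True, False), ("u2", False, True), ("u3", True, False), ("u4", False, False)]
log = [(assign_variant(vid), clicked, bounced) for vid, clicked, bounced in visits]
print(summarize(log))
```

Which variant each made-up visitor lands in depends on the hash, so the printed rates are not shown here; the point is that the same visitor ID always maps to the same variant, and the rates are computed separately for each group.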

How has A/B Testing been used?

A/B Testing is used in a variety of fields to gather direct data that supports decision-making, and it is especially common in UI and UX research.

For example, in their paper “Do Computers Sweat? The Impact of Perceived Effort of Online Decision Aids on Consumers’ Satisfaction With the Decision Process,” Nada Nasr Bechwati and Lan Xia investigated how users’ satisfaction with a search process related to how much effort they felt an online decision aid had saved them. In one of their studies, participants carried out a simulated job search: some of them (Group A) received progress updates while they waited (“‘The search engine has already searched 30% of the database and is now going through the remaining 70%. Please wait.’”), while others (Group B) did not.

Bechwati and Xia state that “online shoppers’ satisfaction with the decision process was found to be positively associated with their perception of the effort saved for them by electronic aids. Moreover, informing shoppers about search progress led to a higher level of perceived saved effort and, consequently, satisfaction. This finding implies that using cues that make online search more salient to consumers could lead to higher level of satisfaction with the aid’s performance” (Bechwati and Xia).

By creating two groups of users, the researchers were able to compare the effect of showing versus not showing search progress. Findings like this give web designers and businesses concrete guidance for improving user experience and retaining customers.